Semantic Grounding of Novel Spoken Words in the Primary Visual Cortex

February 27, 2021

Work on embodied theories of grounded semantics (ETGS). From the abstract:

[According to ETGS,] when word meaning is first acquired, a link is established between symbol (word form) and corresponding semantic information present in modality-specific (including primary) sensorimotor cortices of the brain. [Our work finds] experimental evidence [of] such a link (showing that presentation of a previously unknown word sound induces, after learning, category-specific reactivation of relevant primary sensory or motor brain areas).

I wonder whether it would be feasible to test word-meaning reassignment. E.g., can you get someone to believe that an abstract concept means something different from what they currently believe it means, and what happens then?

Also, note that all objects (mammals or human actions) they presented were already associated in the subject’s mind with existing words. What would’ve happened had they shown completely novel images (e.g. abstract art or unfamiliar objects)?

Setup

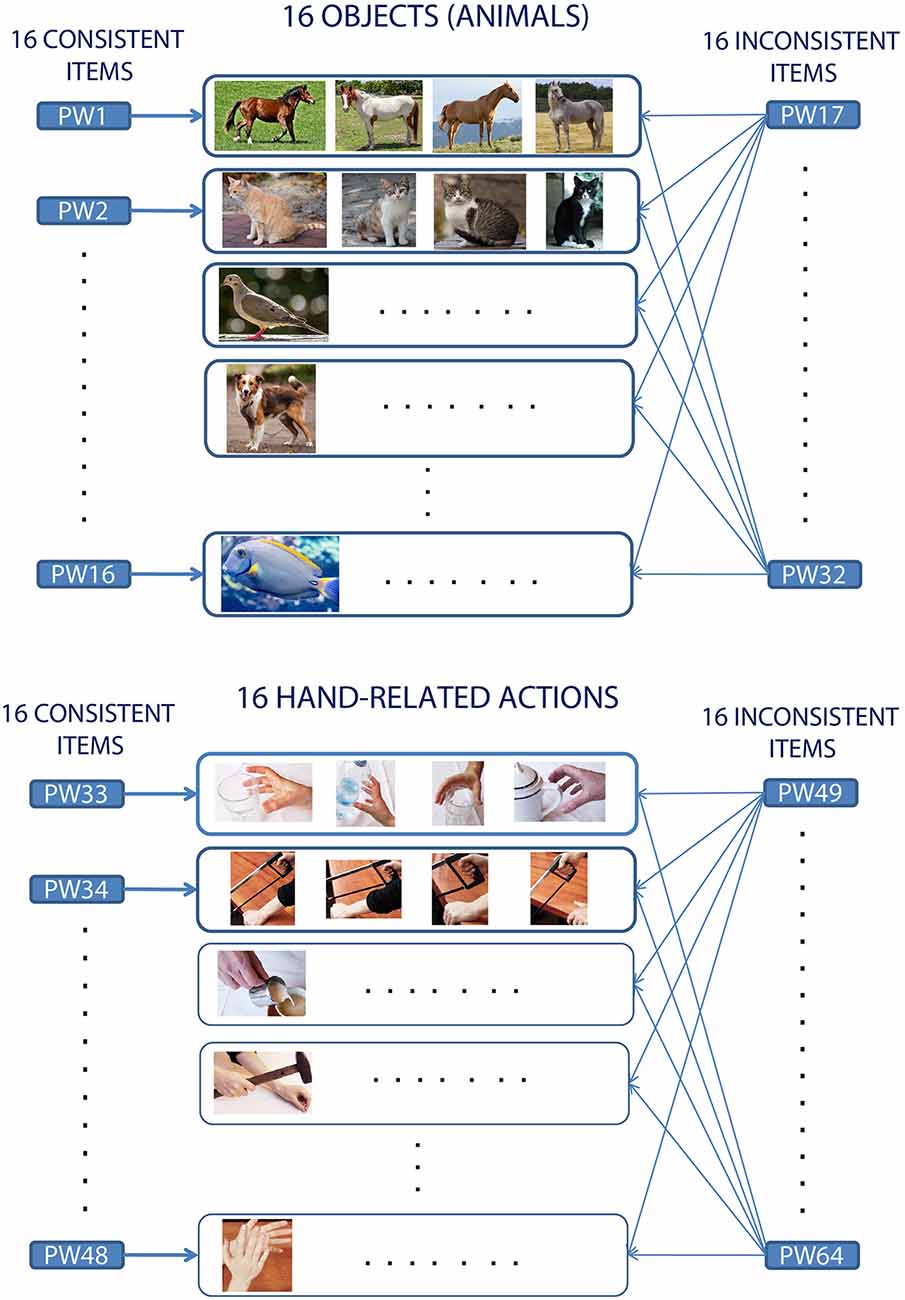

Generally, these types of experiments set up word-picture association tasks. Here, 24 participants were taught meanings for 64 spoken pseudowords (PW) by repetition over 3 consecutive days.

Mapping is generally 1:many between words and objects (consider “beauty” - face, flower, statue, artwork) - see inconsistent pseudowords:

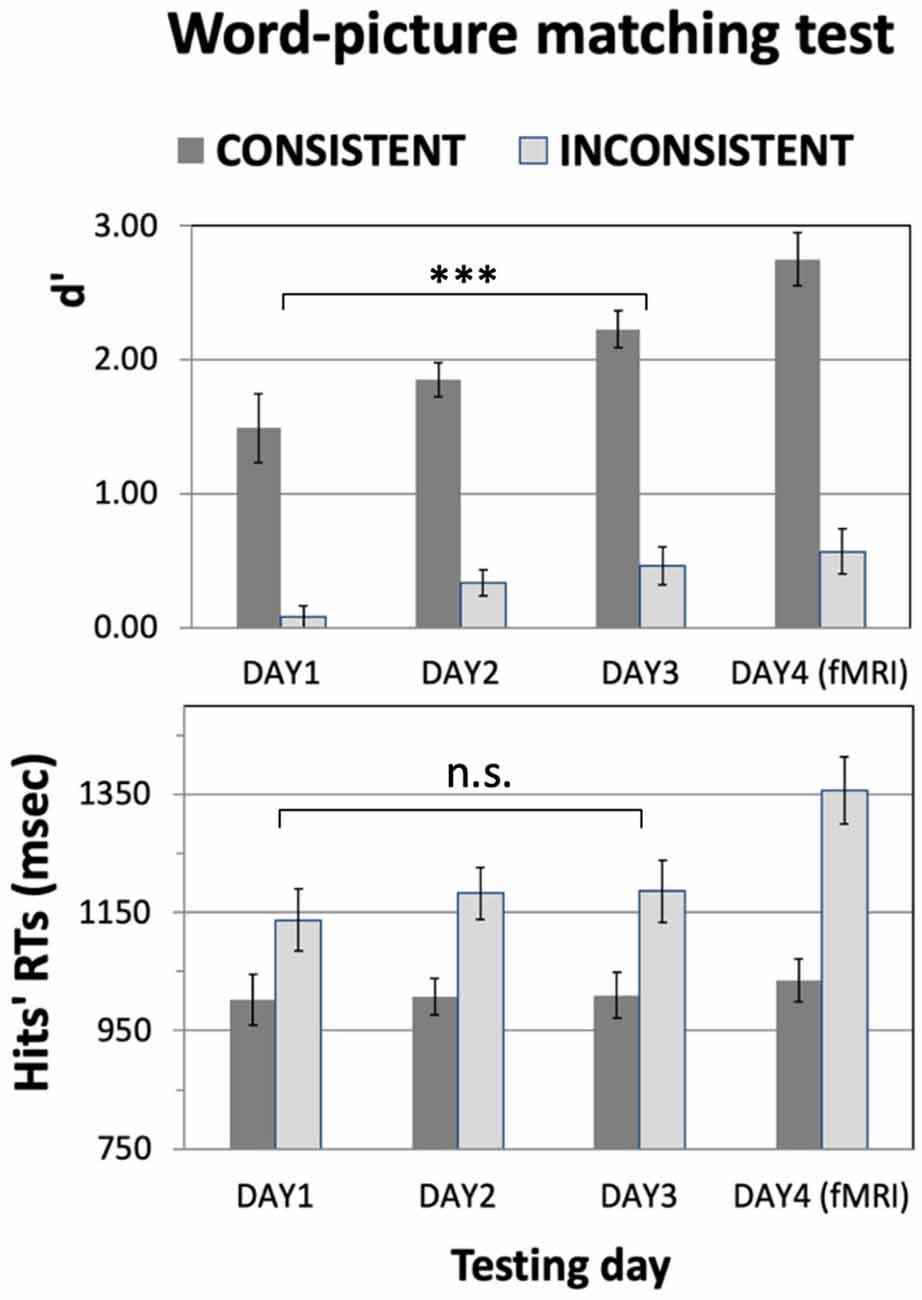

3x2x1h sessions of 256 randomly ordered trials on day 4. Participants are shown a fixation cross and 2 images, and need to choose which image represents a PW.

We ensured that each of the 128 pictures (four instances of 16 object and 16 action types) occurred exactly eight times/session, appearing four times in a consistent- and four times in an inconsistent-word context.
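As a quick sanity check on the numbers in that passage, here is a minimal sketch of the arithmetic. The constant and function names are my own, and it only counts picture presentations; it makes no assumption about how those presentations are split into trials.

```python
# Counts taken from the quoted passage; the names are made up for this sketch.
INSTANCES_PER_TYPE = 4
OBJECT_TYPES = 16
ACTION_TYPES = 16
CONSISTENT_PER_SESSION = 4    # appearances in a consistent-word context
INCONSISTENT_PER_SESSION = 4  # appearances in an inconsistent-word context


def picture_count() -> int:
    """Total number of distinct pictures."""
    return INSTANCES_PER_TYPE * (OBJECT_TYPES + ACTION_TYPES)


def appearances_per_picture() -> int:
    """How often each picture occurs in one session."""
    return CONSISTENT_PER_SESSION + INCONSISTENT_PER_SESSION


def presentations_per_session() -> int:
    """Total picture presentations in one session."""
    return picture_count() * appearances_per_picture()


print(picture_count())              # 128
print(appearances_per_picture())    # 8
print(presentations_per_session())  # 1024
```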

Word-to-picture matching (WTPM)

Fixation cross + audio (900ms) → Two pictures (true and distractor, 3.6s) → Participant presses left/right button (which image represents word?) → Happy or sad face (500ms)

Lexical Familiarity Decision (FD)

Fixation cross (500ms) → Spoken word (900ms) → Participant presses left/right button (did they already learn this word?)
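To keep the two trial timelines (WTPM and FD) straight, here is a small sketch that writes them down as data. The `Phase` class and all names are hypothetical, and I am assuming the button press is self-paced, since the notes give no duration for it; the sum therefore covers only the phases with a stated duration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Phase:
    name: str
    duration_ms: Optional[int]  # None = no fixed duration stated (assumed self-paced)


# Word-to-picture matching trial, as described in the notes.
WTPM = [
    Phase("fixation cross + spoken word", 900),
    Phase("two pictures (true and distractor)", 3600),
    Phase("left/right button press", None),
    Phase("happy or sad face", 500),
]

# Lexical familiarity decision trial.
FD = [
    Phase("fixation cross", 500),
    Phase("spoken word", 900),
    Phase("left/right button press", None),
]


def fixed_duration_ms(phases: List[Phase]) -> int:
    """Sum the durations of all phases that have a stated duration."""
    return sum(p.duration_ms for p in phases if p.duration_ms is not None)


print(fixed_duration_ms(WTPM))  # 5000
print(fixed_duration_ms(FD))    # 1400
```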

Results

Subjects whose average reaction times (RTs) were more than 2 SD from the group mean were excluded.
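The exclusion rule above can be sketched as follows. This is my own illustration, not the authors' code: the subject IDs and RT values are invented, and I am assuming the sample standard deviation and a group mean computed over all subjects (including the ones that end up excluded), neither of which is stated in the notes.

```python
from statistics import mean, stdev
from typing import Dict


def exclude_rt_outliers(mean_rts: Dict[str, float], n_sd: float = 2.0) -> Dict[str, float]:
    """Keep subjects whose average RT lies within n_sd SDs of the group mean."""
    values = list(mean_rts.values())
    group_mean = mean(values)
    group_sd = stdev(values)  # sample SD; an assumption, see the note above
    return {
        subject: rt
        for subject, rt in mean_rts.items()
        if abs(rt - group_mean) <= n_sd * group_sd
    }


# Invented per-subject average RTs in milliseconds.
example_rts = {
    "s1": 600, "s2": 610, "s3": 620, "s4": 630,
    "s5": 640, "s6": 650, "s7": 660, "s8": 1200,
}
kept = exclude_rt_outliers(example_rts)
print(sorted(kept))  # "s8" is dropped, the other seven subjects are kept
```

Note that with small groups a single extreme subject inflates the SD, so a 2 SD cutoff can be more lenient than it looks.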

Otherwise, I’m not really familiar with the brain imaging techniques they used, but some of the results seem convincing. From the discussion: novel word sounds associated with mammals activated V1 areas; however, novel words associated with actions did not.

[Listening to novel words] activated left-lateralized superior temporal cortex and, after they had co-occurred with different exemplars from the same conceptual category (for example, four different cats), the novel sounds also sparked visual cortex, including left posterior fusiform and bilateral primary visual cortex (BA 17) […] Intriguingly, words associated with a wide range of objects (or actions) did not significantly activate the occipital regions. These results document the formation of associative semantic links between a novel spoken word form and a basic conceptual category (i.e., that of a familiar animal), localizing, for the first time, brain correlates of the newly acquired word meaning to the primary visual cortex.